Welcome To My Blog

You Will Be Able To Answer :-

* What Is Artificial Intelligence ?

* What Is OCR ?

Optical character recognition or optical character reader (OCR) is the electronic or mechanical conversion of images of typed, handwritten or printed text into machine-encoded text, whether from a scanned document, a photo of a document, a scene-photo (for example the text on signs and billboards in a landscape photo) or from subtitle text superimposed on an image (for example: from a television broadcast).

Widely used as a form of data entry from printed paper data records – whether passport documents, invoices, bank statements, computerized receipts, business cards, mail, printouts of static-data, or any suitable documentation – it is a common method of digitizing printed texts so that they can be electronically edited, searched, stored more compactly, displayed on-line, and used in machine processes such as cognitive computing, machine translation, (extracted) text-to-speech, key data and text mining. OCR is a field of research in pattern recognition, artificial intelligence and computer vision.

Early versions needed to be trained with images of each character, and worked on one font at a time. Advanced systems capable of producing a high degree of recognition accuracy for most fonts are now common, and they support a variety of digital image file formats as input. Some systems are capable of reproducing formatted output that closely approximates the original page including images, columns, and other non-textual components.

History

Early optical character recognition may be traced to technologies involving telegraphy and creating reading devices for the blind.[3] In 1914, Emanuel Goldberg developed a machine that read characters and converted them into standard telegraph code.[4] Concurrently, Edmund Fournier d'Albe developed the Optophone, a handheld scanner that when moved across a printed page, produced tones that corresponded to specific letters or characters.[5]

In the late 1920s and into the 1930s Emanuel Goldberg developed what he called a "Statistical Machine" for searching microfilm archives using an optical code recognition system. In 1931 he was granted USA Patent number 1,838,389 for the invention. The patent was acquired by IBM.

Blind and visually impaired users

In 1974, Ray Kurzweil started the company Kurzweil Computer Products, Inc. and continued development of omni-font OCR, which could recognize text printed in virtually any font (Kurzweil is often credited with inventing omni-font OCR, but it was in use by companies, including CompuScan, in the late 1960s and 1970s[3][6]). Kurzweil decided that the best application of this technology would be to create a reading machine for the blind, which would allow blind people to have a computer read text to them out loud. This device required the invention of two enabling technologies – the CCD flatbed scanner and the text-to-speech synthesizer. On January 13, 1976, the successful finished product was unveiled during a widely reported news conference headed by Kurzweil and the leaders of the National Federation of the Blind. In 1978, Kurzweil Computer Products began selling a commercial version of the optical character recognition computer program. LexisNexis was one of the first customers, and bought the program to upload legal paper and news documents onto its nascent online databases. Two years later, Kurzweil sold his company to Xerox, which had an interest in further commercializing paper-to-computer text conversion. Xerox eventually spun it off as Scansoft, which merged with Nuance Communications.

In the 2000s, OCR was made available online as a service (WebOCR), in a cloud computing environment, and in mobile applications like real-time translation of foreign-language signs on a smartphone. With the advent of smartphones and smartglasses, OCR can be used in internet-connected mobile device applications that extract text captured using the device's camera. Devices that do not have OCR functionality built into the operating system will typically use an OCR API to extract the text from the image file captured and provided by the device.[7][8] The OCR API returns the extracted text, along with information about the location of the detected text in the original image, back to the device app for further processing (such as text-to-speech) or display.

Various commercial and open source OCR systems are available for most common writing systems, including Latin, Cyrillic, Arabic, Hebrew, Indic, Bengali (Bangla), Devanagari, Tamil, Chinese, Japanese, and Korean characters.

Applications

OCR engines have been developed into many kinds of domain-specific OCR applications, such as receipt OCR, invoice OCR, check OCR, and legal billing document OCR.

They can be used for:

- Data entry for business documents, e.g. cheque, passport, invoice, bank statement and receipt

- Automatic number plate recognition

- In airports, for passport recognition and information extraction

- Automatic extraction of key information from insurance documents

- Traffic sign recognition[9]

- Extracting business card information into a contact list[10]

- Making textual versions of printed documents more quickly, e.g. book scanning for Project Gutenberg

- Making electronic images of printed documents searchable, e.g. Google Books

- Converting handwriting in real-time to control a computer (pen computing)

- Defeating CAPTCHA anti-bot systems, though these are specifically designed to prevent OCR.[11][12][13] The purpose can also be to test the robustness of CAPTCHA anti-bot systems.

- Assistive technology for blind and visually impaired users

- Writing the instructions for vehicles by identifying CAD images in a database that are appropriate to the vehicle design as it changes in real time.

- Making scanned documents searchable by converting them to searchable PDFs

Types

- Optical character recognition (OCR) – targets typewritten text, one glyph or character at a time.

- Optical word recognition – targets typewritten text, one word at a time (for languages that use a space as a word divider). (Usually just called "OCR".)

- Intelligent character recognition (ICR) – also targets handwritten printscript or cursive text one glyph or character at a time, usually involving machine learning.

- Intelligent word recognition (IWR) – also targets handwritten printscript or cursive text, one word at a time. This is especially useful for languages where glyphs are not separated in cursive script.

OCR is generally an "offline" process, which analyses a static document. There are cloud-based services which provide an online OCR API service. Handwriting movement analysis can be used as input to handwriting recognition.[14] Instead of merely using the shapes of glyphs and words, this technique is able to capture motions, such as the order in which segments are drawn, the direction, and the pattern of putting the pen down and lifting it. This additional information can make the end-to-end process more accurate. This technology is also known as "on-line character recognition", "dynamic character recognition", "real-time character recognition", and "intelligent character recognition".

Techniques

Pre-processing

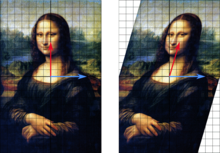

OCR software often "pre-processes" images to improve the chances of successful recognition. Techniques include:[15]

- De-skew – If the document was not aligned properly when scanned, it may need to be tilted a few degrees clockwise or counterclockwise in order to make lines of text perfectly horizontal or vertical.

- Despeckle – remove positive and negative spots, smoothing edges

- Binarisation – Convert an image from color or greyscale to black-and-white (called a "binary image" because there are two colors). Binarisation is a simple way of separating the text (or any other desired image component) from the background.[16] It is necessary because most commercial recognition algorithms work only on binary images, which are simpler to process.[17] The effectiveness of the binarisation step also influences the quality of the character recognition stage to a significant extent, so the binarisation method has to be chosen carefully for a given input image: how well a method performs depends on the type of input image (scanned document, scene text image, historical degraded document, etc.).[18][19]

- Line removal – Cleans up non-glyph boxes and lines

- Layout analysis or "zoning" – Identifies columns, paragraphs, captions, etc. as distinct blocks. Especially important in multi-column layouts and tables.

- Line and word detection – Establishes baseline for word and character shapes, separates words if necessary.

- Script recognition – In multilingual documents, the script may change at the level of the words and hence, identification of the script is necessary, before the right OCR can be invoked to handle the specific script.[20]

- Character isolation or "segmentation" – For per-character OCR, multiple characters that are connected due to image artifacts must be separated; single characters that are broken into multiple pieces due to artifacts must be connected.

- Normalize aspect ratio and scale[21]
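As a rough illustration of the binarisation step in the list above, the sketch below picks one global threshold with Otsu's method. That method is only one common choice and is an assumption here, not something the text prescribes; the function names and the tiny sample image are also made up for the example, and real engines often use adaptive, per-region thresholds instead.

```python
def otsu_threshold(pixels):
    """Return the grey level (0-255) that best separates dark from light pixels."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_between = 0, -1.0
    count_dark = 0  # pixels at or below the candidate threshold
    sum_dark = 0    # sum of their grey levels
    for t in range(256):
        count_dark += hist[t]
        sum_dark += t * hist[t]
        count_light = total - count_dark
        if count_dark == 0 or count_light == 0:
            continue
        mean_dark = sum_dark / count_dark
        mean_light = (sum_all - sum_dark) / count_light
        # Between-class variance (up to a constant factor); larger is better.
        between = count_dark * count_light * (mean_dark - mean_light) ** 2
        if between > best_between:
            best_between, best_t = between, t
    return best_t


def binarise(image):
    """Map a greyscale image (list of rows) to 1 for ink and 0 for background."""
    threshold = otsu_threshold([p for row in image for p in row])
    return [[1 if p <= threshold else 0 for p in row] for row in image]


# Hypothetical 3x4 greyscale scan: dark strokes on a light page.
image = [
    [250, 248, 30, 252],
    [245, 25, 28, 249],
    [251, 247, 35, 250],
]
binary = binarise(image)
```

The result marks the four dark pixels as ink and everything else as background, which is the form most of the later steps expect.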

Segmentation of fixed-pitch fonts is accomplished relatively simply by aligning the image to a uniform grid based on where vertical grid lines will least often intersect black areas. For proportional fonts, more sophisticated techniques are needed because whitespace between letters can sometimes be greater than that between words, and vertical lines can intersect more than one character.[22]
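The fixed-pitch case can be sketched in a few lines. The example assumes the character pitch (cell width in pixels) is already known and that the image has been binarised to 1 for ink and 0 for background; both are assumptions for illustration, and `grid_lines` is a made-up helper name.

```python
def column_ink(binary):
    """Number of black pixels (value 1) in each column of a binary image."""
    return [sum(column) for column in zip(*binary)]


def grid_lines(binary, pitch):
    """Columns of the evenly spaced vertical lines that cross the least ink."""
    ink = column_ink(binary)

    def ink_crossed(offset):
        # Total ink hit by grid lines placed at offset, offset + pitch, ...
        return sum(ink[x] for x in range(offset, len(ink), pitch))

    offset = min(range(pitch), key=ink_crossed)
    return list(range(offset, len(ink), pitch))


# Three 3-pixel-wide glyphs, each followed by one blank column (pitch 4).
binary = [
    [1, 1, 1, 0, 1, 0, 1, 0, 1, 1, 1, 0],
    [1, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 0],
]
cuts = grid_lines(binary, pitch=4)
```

Here the lines land on the blank columns between glyphs. Proportional fonts break the even-spacing assumption, which is why they need the more sophisticated techniques mentioned above.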

Text recognition

There are two basic types of core OCR algorithm, which may produce a ranked list of candidate characters.[23]

- Matrix matching involves comparing an image to a stored glyph on a pixel-by-pixel basis; it is also known as "pattern matching", "pattern recognition", or "image correlation". This relies on the input glyph being correctly isolated from the rest of the image, and on the stored glyph being in a similar font and at the same scale. This technique works best with typewritten text and does not work well when new fonts are encountered. This is the technique the early physical photocell-based OCR implemented, rather directly.

- Feature extraction decomposes glyphs into "features" like lines, closed loops, line direction, and line intersections. Extracting features reduces the dimensionality of the representation and makes the recognition process computationally efficient. These features are compared with an abstract vector-like representation of a character, which might reduce to one or more glyph prototypes. General techniques of feature detection in computer vision are applicable to this type of OCR, which is commonly seen in "intelligent" handwriting recognition and indeed most modern OCR software.[24] Nearest neighbour classifiers such as the k-nearest neighbors algorithm are used to compare image features with stored glyph features and choose the nearest match.[25]
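A minimal sketch of matrix matching, the first approach in the list above, might look like this. The 3x3 templates and the scoring rule (fraction of agreeing pixels) are assumptions chosen to keep the example small; a real engine stores far larger glyph images and must first isolate and scale the input glyph.

```python
# Hypothetical stored glyphs, one string per pixel row ("1" = ink).
TEMPLATES = {
    "I": ["010", "010", "010"],
    "L": ["100", "100", "111"],
    "T": ["111", "010", "010"],
}


def match_score(glyph, template):
    """Fraction of pixels on which two same-sized bitmaps agree."""
    pairs = [
        (g, t)
        for glyph_row, template_row in zip(glyph, template)
        for g, t in zip(glyph_row, template_row)
    ]
    return sum(g == t for g, t in pairs) / len(pairs)


def rank_candidates(glyph, templates=TEMPLATES):
    """Return (character, score) pairs, best match first."""
    scores = {ch: match_score(glyph, tpl) for ch, tpl in templates.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


noisy_t = ["111", "010", "011"]  # a "T" with one stray pixel
ranking = rank_candidates(noisy_t)
```

The function returns a ranked list of candidates instead of a single answer, so later stages such as a lexicon check can still overrule the top pixel match.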

Software such as Cuneiform and Tesseract use a two-pass approach to character recognition. The second pass is known as "adaptive recognition" and uses the letter shapes recognized with high confidence on the first pass to recognize better the remaining letters on the second pass. This is advantageous for unusual fonts or low-quality scans where the font is distorted (e.g. blurred or faded).[22]

Modern OCR software such as OCRopus or Tesseract uses neural networks that were trained to recognize whole lines of text instead of focusing on single characters.

A new technique known as iterative OCR automatically crops a document into sections based on page layout. OCR is performed on the sections individually using variable character confidence level thresholds to maximize page-level OCR accuracy.[26]

The OCR result can be stored in the standardized ALTO format, a dedicated XML schema maintained by the United States Library of Congress. Other common formats include hOCR and PAGE XML.


Post-processing

OCR accuracy can be increased if the output is constrained by a lexicon – a list of words that are allowed to occur in a document.[15] This might be, for example, all the words in the English language, or a more technical lexicon for a specific field. This technique can be problematic if the document contains words not in the lexicon, like proper nouns. Tesseract uses its dictionary to influence the character segmentation step, for improved accuracy.[22]

The output stream may be a plain text stream or file of characters, but more sophisticated OCR systems can preserve the original layout of the page and produce, for example, an annotated PDF that includes both the original image of the page and a searchable textual representation.

"Near-neighbor analysis" can make use of co-occurrence frequencies to correct errors, by noting that certain words are often seen together.[27] For example, "Washington, D.C." is generally far more common in English than "Washington DOC".

Knowledge of the grammar of the language being scanned can also help determine if a word is likely to be a verb or a noun, for example, allowing greater accuracy.

The Levenshtein Distance algorithm has also been used in OCR post-processing to further optimize results from an OCR API.[28]
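To make the lexicon and Levenshtein ideas in this section concrete, here is a small sketch that snaps each recognised word to the nearest lexicon entry. The lexicon, the `max_distance` cut-off, and the function names are illustrative assumptions, not part of any particular OCR API.

```python
def levenshtein(a, b):
    """Minimum number of single-character edits that turn string a into b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # substitute (or keep) the character
            ))
        previous = current
    return previous[-1]


def correct_word(word, lexicon, max_distance=2):
    """Replace word with its closest lexicon entry, if one is close enough."""
    best = min(lexicon, key=lambda entry: levenshtein(word, entry))
    return best if levenshtein(word, best) <= max_distance else word


LEXICON = ["invoice", "receipt", "passport", "statement"]

fixed = correct_word("rece1pt", LEXICON)     # a digit misread inside a known word
kept = correct_word("Kurzweil", LEXICON)     # a proper noun missing from the lexicon
```

The cut-off is what keeps words that are not in the lexicon, such as proper nouns, from being forced onto an unrelated entry; choosing it is a trade-off between fixing more errors and introducing new ones.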

Application-specific optimizations

In recent years, the major OCR technology providers began to tweak OCR systems to deal more efficiently with specific types of input. Beyond an application-specific lexicon, better performance may be had by taking into account business rules, standard expression, or rich information contained in colour images. This strategy is called "Application-Oriented OCR" or "Customized OCR", and has been applied to OCR of license plates, invoices, screenshots, ID cards, driver licenses, and automobile manufacturing.

The New York Times has adapted OCR technology into a proprietary tool called Document Helper, which enables its interactive news team to accelerate the processing of documents that need to be reviewed. The team notes that it can process as many as 5,400 pages per hour in preparation for reporters to review the contents.[29]

Workarounds

There are several techniques for solving the problem of character recognition by means other than improved OCR algorithms.

Forcing better input

Special fonts like OCR-A, OCR-B, or MICR fonts, with precisely specified sizing, spacing, and distinctive character shapes, allow a higher accuracy rate during transcription in bank check processing. Ironically, however, several prominent OCR engines were designed to capture text in popular fonts such as Arial or Times New Roman, and are incapable of capturing text in these specialized fonts, which differ considerably from popularly used fonts. As Google Tesseract can be trained to recognize new fonts, it can recognize OCR-A, OCR-B and MICR fonts.[30]

"Comb fields" are pre-printed boxes that encourage humans to write more legibly – one glyph per box.[27] These are often printed in a "dropout colour " which can be easily removed by the OCR system.[27]

Palm OS used a special set of glyphs, known as "Graffiti" which are similar to printed English characters but simplified or modified for easier recognition on the platform's computationally limited hardware. Users would need to learn how to write these special glyphs.

Zone-based OCR restricts the image to a specific part of a document. This is often referred to as "Template OCR".

Crowdsourcing

Crowdsourcing humans to perform the character recognition can process images quickly, like computer-driven OCR, but with higher accuracy than is obtained with computers. Practical systems include the Amazon Mechanical Turk and reCAPTCHA. The National Library of Finland has developed an online interface for users to correct OCRed texts in the standardized ALTO format.[31] Crowdsourcing has also been used not to perform character recognition directly but to invite software developers to develop image processing algorithms, for example, through the use of rank-order tournaments.[32]

Accuracy

Recognition of Latin-script, typewritten text is still not 100% accurate even where clear imaging is available. One study based on recognition of 19th- and early 20th-century newspaper pages concluded that character-by-character OCR accuracy for commercial OCR software varied from 81% to 99%;[34] total accuracy can be achieved by human review or Data Dictionary Authentication. Other areas, including recognition of hand printing, cursive handwriting, and printed text in other scripts (especially those East Asian language characters which have many strokes for a single character), are still the subject of active research. The MNIST database is commonly used for testing systems' ability to recognise handwritten digits. Commissioned by the U.S. Department of Energy (DOE), the Information Science Research Institute (ISRI) had the mission to foster the improvement of automated technologies for understanding machine printed documents, and it conducted the most authoritative series of tests, the Annual Test of OCR Accuracy, from 1992 to 1996.[33]

Accuracy rates can be measured in several ways, and how they are measured can greatly affect the reported accuracy rate. For example, if word context (basically a lexicon of words) is not used to correct software finding non-existent words, a character error rate of 1% (99% accuracy) may result in an error rate of 5% (95% accuracy) or worse if the measurement is based on whether each whole word was recognized with no incorrect letters.[35] A large enough dataset is very important for neural-network-based handwriting recognition solutions. On the other hand, producing natural datasets is very complicated and time-consuming.[36]
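The jump from a 1% character error rate to roughly a 5% word error rate follows from simple arithmetic. The sketch below assumes that character errors are independent and that a typical word is five letters long; both are simplifying assumptions made for the example, and the function name is made up.

```python
def word_error_rate(char_error_rate, word_length):
    """Chance that a word of the given length contains at least one wrong character."""
    char_accuracy = 1 - char_error_rate
    return 1 - char_accuracy ** word_length


rate = word_error_rate(0.01, 5)
print(f"{rate:.1%}")  # prints 4.9%
```

Longer words push the figure higher, which is one way to read the "or worse" in the paragraph above.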

An example of the difficulties inherent in digitizing old text is the inability of OCR to differentiate between the "long s" and "f" characters.[37]

Web-based OCR systems for recognizing hand-printed text on the fly have become well known as commercial products in recent years (see Tablet PC history). Accuracy rates of 80% to 90% on neat, clean hand-printed characters can be achieved by pen computing software, but that accuracy rate still translates to dozens of errors per page, making the technology useful only in very limited applications.

Recognition of cursive text is an active area of research, with recognition rates even lower than those of hand-printed text. Higher rates of recognition of general cursive script will likely not be possible without the use of contextual or grammatical information. For example, recognizing entire words from a dictionary is easier than trying to parse individual characters from script. Reading the Amount line of a cheque (which is always a written-out number) is an example where using a smaller dictionary can increase recognition rates greatly. The shapes of individual cursive characters themselves simply do not contain enough information to accurately (greater than 98%) recognize all handwritten cursive script.

Most programs allow users to set "confidence rates". This means that if the software does not achieve their desired level of accuracy, a user can be notified for manual review.
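A confidence threshold of this kind can be as simple as the sketch below. The `(word, confidence)` pairs and the 0.90 default are hypothetical; each OCR program reports confidence in its own format and on its own scale.

```python
def words_needing_review(recognised, threshold=0.90):
    """Return the words whose confidence falls below the user's threshold."""
    return [word for word, confidence in recognised if confidence < threshold]


# Hypothetical engine output for one line of an invoice.
page = [("Invoice", 0.99), ("t0tal", 0.62), ("due", 0.95), ("3l", 0.48)]
flagged = words_needing_review(page)
```

Only the flagged words would be sent to a person for manual review.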

An error introduced by OCR scanning is sometimes termed a "scanno" (by analogy with the term "typo").

Unicode

Characters to support OCR were added to the Unicode Standard in June 1993, with the release of version 1.1.

* What Is Face Recognition ?

Automated facial recognition was pioneered in the 1960s. Woody Bledsoe, Helen Chan Wolf, and Charles Bisson worked on using the computer to recognize human faces. Their early facial recognition project was dubbed "man-machine" because the coordinates of the facial features in a photograph had to be established by a human before they could be used by the computer for recognition. On a graphics tablet a human had to pinpoint the coordinates of facial features such as the pupil centers, the inside and outside corners of the eyes, and the widow's peak in the hairline. The coordinates were used to calculate 20 distances, including the width of the mouth and of the eyes. A human could process about 40 pictures an hour in this manner and so build a database of the computed distances. A computer would then automatically compare the distances for each photograph, calculate the difference between the distances and return the closest records as a possible match.[3]

In 1970, Takeo Kanade publicly demonstrated a face matching system that located anatomical features such as the chin and calculated the distance ratio between facial features without human intervention. Later tests revealed that the system could not always reliably identify facial features. Nonetheless, interest in the subject grew and in 1977 Kanade published the first detailed book on facial recognition technology.[4]

In 1993, the Defense Advanced Research Projects Agency (DARPA) and the Army Research Laboratory (ARL) established the face recognition technology program FERET to develop "automatic face recognition capabilities" that could be employed in a productive real life environment "to assist security, intelligence, and law enforcement personnel in the performance of their duties." Face recognition systems that had been trialed in research labs were evaluated and the FERET tests found that while the performance of existing automated facial recognition systems varied, a handful of existing methods could viably be used to recognize faces in still images taken in a controlled environment.[5] The FERET tests spawned three US companies that sold automated facial recognition systems. Vision Corporation and Miros Inc were both founded in 1994, by researchers who used the results of the FERET tests as a selling point. Viisage Technology was established by an identification card defense contractor in 1996 to commercially exploit the rights to the facial recognition algorithm developed by Alex Pentland at MIT.[6]

Following the 1993 FERET face recognition vendor test, the Department of Motor Vehicles (DMV) offices in West Virginia and New Mexico were the first DMV offices to use automated facial recognition systems as a way to prevent and detect people obtaining multiple driving licenses under different names. Driver's licenses in the United States were at that point a commonly accepted form of photo identification. DMV offices across the United States were undergoing a technological upgrade and were in the process of establishing databases of digital ID photographs. This enabled DMV offices to deploy the facial recognition systems on the market to search photographs for new driving licenses against the existing DMV database.[7] DMV offices became one of the first major markets for automated facial recognition technology and introduced US citizens to facial recognition as a standard method of identification.[8] The increase of the US prison population in the 1990s prompted U.S. states to establish connected and automated identification systems that incorporated digital biometric databases; in some instances this included facial recognition. In 1999 Minnesota incorporated the facial recognition system FaceIT by Visionics into a mug shot booking system that allowed police, judges and court officers to track criminals across the state.[9]

Until the 1990s facial recognition systems were developed primarily by using photographic portraits of human faces. Research on face recognition to reliably locate a face in an image that contains other objects gained traction in the early 1990s with principal component analysis (PCA). The PCA method of face detection is also known as Eigenface and was developed by Matthew Turk and Alex Pentland.[10] Turk and Pentland combined the conceptual approach of the Karhunen–Loève theorem and factor analysis to develop a linear model. Eigenfaces are determined based on global and orthogonal features in human faces. A human face is calculated as a weighted combination of a number of Eigenfaces. Because few Eigenfaces were used to encode human faces of a given population, Turk and Pentland's PCA face detection method greatly reduced the amount of data that had to be processed to detect a face. Pentland in 1994 defined Eigenface features, including eigen eyes, eigen mouths and eigen noses, to advance the use of PCA in facial recognition. In 1997 the PCA Eigenface method of face recognition[11] was improved upon using linear discriminant analysis (LDA) to produce Fisherfaces.[12] LDA Fisherfaces became dominantly used in PCA feature based face recognition, while Eigenfaces were also used for face reconstruction. In these approaches no global structure of the face is calculated which links the facial features or parts.[13]

Purely feature based approaches to facial recognition were overtaken in the late 1990s by the Bochum system, which used Gabor filter to record the face features and computed a grid of the face structure to link the features.[14] Christoph von der Malsburg and his research team at the University of Bochum developed Elastic Bunch Graph Matching in the mid 1990s to extract a face out of an image using skin segmentation.[15] By 1997 the face detection method developed by Malsburg outperformed most other facial detection systems on the market. The so-called "Bochum system" of face detection was sold commercially on the market as ZN-Face to operators of airports and other busy locations. The software was "robust enough to make identifications from less-than-perfect face views. It can also often see through such impediments to identification as mustaches, beards, changed hairstyles and glasses—even sunglasses".[16]

Real-time face detection in video footage became possible in 2001 with the Viola–Jones object detection framework for faces.[17] Paul Viola and Michael Jones combined the Haar-like feature approach to object recognition in digital images with classifiers trained by AdaBoost, a boosting algorithm, to produce the first real-time frontal-view face detector.[18] By 2015 the Viola-Jones algorithm had been implemented using small low power detectors on handheld devices and embedded systems. Therefore, the Viola-Jones algorithm has not only broadened the practical application of face recognition systems but has also been used to support new features in user interfaces and teleconferencing.[19]

Techniques for face recognition

While humans can recognize faces without much effort,[20] facial recognition is a challenging pattern recognition problem in computing. Facial recognition systems attempt to identify a human face, which is three-dimensional and changes in appearance with lighting and facial expression, based on its two-dimensional image. To accomplish this computational task, facial recognition systems perform four steps. First face detection is used to segment the face from the image background. In the second step the segmented face image is aligned to account for face pose, image size and photographic properties, such as illumination and grayscale. The purpose of the alignment process is to enable the accurate localization of facial features in the third step, the facial feature extraction. Features such as eyes, nose and mouth are pinpointed and measured in the image to represent the face. The so established feature vector of the face is then, in the fourth step, matched against a database of faces.[21]

Traditional

Some face recognition algorithms identify facial features by extracting landmarks, or features, from an image of the subject's face. For example, an algorithm may analyze the relative position, size, and/or shape of the eyes, nose, cheekbones, and jaw.[22] These features are then used to search for other images with matching features.[23]
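A landmark-based search of this kind can be sketched as follows. The landmark names, the coordinates, and the choice to scale every distance by the distance between the eyes are assumptions made for the example; the text does not say how any particular system builds or compares its features.

```python
import math
from itertools import combinations


def feature_vector(landmarks):
    """Pairwise landmark distances, scaled by the distance between the eyes."""
    names = sorted(landmarks)
    eye_span = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    return [
        math.dist(landmarks[a], landmarks[b]) / eye_span
        for a, b in combinations(names, 2)
    ]


def closest_match(probe, gallery):
    """Name of the gallery face whose feature vector is nearest to the probe's."""
    probe_vector = feature_vector(probe)

    def difference(name):
        return math.dist(probe_vector, feature_vector(gallery[name]))

    return min(gallery, key=difference)


# Hypothetical (x, y) landmark positions in pixels.
gallery = {
    "alice": {"left_eye": (30, 40), "right_eye": (70, 40),
              "nose": (50, 60), "mouth": (50, 80)},
    "bob": {"left_eye": (30, 40), "right_eye": (70, 40),
            "nose": (50, 70), "mouth": (50, 95)},
}
# The same face as "alice", photographed at twice the size with slight jitter.
probe = {"left_eye": (60, 80), "right_eye": (140, 80),
         "nose": (100, 121), "mouth": (100, 160)}

match = closest_match(probe, gallery)
```

Dividing by the eye distance makes the features independent of image scale, so the larger probe photo still matches the smaller gallery entry.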

Other algorithms normalize a gallery of face images and then compress the face data, only saving the data in the image that is useful for face recognition. A probe image is then compared with the face data.[24] One of the earliest successful systems[25] is based on template matching techniques[26] applied to a set of salient facial features, providing a sort of compressed face representation.

Recognition algorithms can be divided into two main approaches: geometric, which looks at distinguishing features, or photometric, which is a statistical approach that distills an image into values and compares the values with templates to eliminate variances. Some classify these algorithms into two broad categories: holistic and feature-based models. The former attempt to recognize the face in its entirety, while feature-based models subdivide the face into components according to features and analyze each component as well as its spatial location with respect to other features.[27]

Popular recognition algorithms include principal component analysis using eigenfaces, linear discriminant analysis using the Fisherface algorithm, elastic bunch graph matching, the hidden Markov model, multilinear subspace learning using tensor representation, and the neuronal motivated dynamic link matching.[28]
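Principal component analysis with eigenfaces, the first algorithm in the list above, represents a face as the average face plus a weighted combination of eigenfaces. The sketch below is a toy version under stated assumptions: faces are flattened to four "pixels", only the single leading eigenface is computed, and it is found by power iteration in plain Python. Real systems use thousands of pixels, many eigenfaces, and a linear algebra library.

```python
import math


def mean_face(faces):
    """Average the faces pixel by pixel."""
    return [sum(column) / len(faces) for column in zip(*faces)]


def leading_eigenface(faces, iterations=50):
    """Direction of greatest variation among the faces, found by power iteration."""
    mean = mean_face(faces)
    centred = [[p - m for p, m in zip(face, mean)] for face in faces]
    # Arbitrary non-zero starting guess; it only has to overlap the true direction.
    vector = [float(k + 1) for k in range(len(mean))]
    for _ in range(iterations):
        # Apply the covariance matrix (up to a constant factor) without building it:
        # C v = sum over faces of (x . v) x
        dots = [sum(x * v for x, v in zip(row, vector)) for row in centred]
        vector = [sum(d * row[k] for d, row in zip(dots, centred))
                  for k in range(len(mean))]
        norm = math.sqrt(sum(v * v for v in vector))
        if norm == 0:
            raise ValueError("starting guess is orthogonal to the data")
        vector = [v / norm for v in vector]
    return mean, vector


def encode(face, mean, eigenface):
    """Weight of the eigenface in this face (projection onto the eigenface)."""
    return sum((p - m) * e for p, m, e in zip(face, mean, eigenface))


def decode(weight, mean, eigenface):
    """Rebuild a face as the mean face plus the weighted eigenface."""
    return [m + weight * e for m, e in zip(mean, eigenface)]


# Hypothetical 4-pixel "faces" that differ along a single direction.
faces = [
    [10, 20, 30, 40],
    [12, 20, 28, 40],
    [14, 20, 26, 40],
    [16, 20, 24, 40],
]
mean, eigenface = leading_eigenface(faces)
weight = encode(faces[0], mean, eigenface)
rebuilt = decode(weight, mean, eigenface)
```

Because this toy data varies along one direction only, a single weight is enough to rebuild each face; storing one number per face instead of every pixel is the data reduction that made the approach attractive.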

Human identification at a distance (HID)

To enable human identification at a distance (HID), low-resolution images of faces are enhanced using face hallucination. In CCTV imagery faces are often very small, but facial recognition algorithms that identify and plot facial features require high resolution images. Resolution enhancement techniques have therefore been developed to enable facial recognition systems to work with imagery that has been captured in environments with a low signal-to-noise ratio. Face hallucination algorithms that are applied to images prior to those images being submitted to the facial recognition system utilise example-based machine learning with pixel substitution or nearest neighbour distribution indexes that may also incorporate demographic and age related facial characteristics. Use of face hallucination techniques improves the performance of high resolution facial recognition algorithms and may be used to overcome the inherent limitations of super-resolution algorithms. Face hallucination techniques are also used to pre-treat imagery where faces are disguised. Here the disguise, such as sunglasses, is removed and the face hallucination algorithm is applied to the image. Such face hallucination algorithms need to be trained on similar face images with and without disguise. To fill in the area uncovered by removing the disguise, face hallucination algorithms need to correctly map the entire state of the face, which may not be possible due to the momentary facial expression captured in the low resolution image.[29]

3-dimensional recognition

Three-dimensional face recognition techniques use 3D sensors to capture information about the shape of a face. This information is then used to identify distinctive features on the surface of a face, such as the contour of the eye sockets, nose, and chin.[30] One advantage of 3D face recognition is that it is not affected by changes in lighting like other techniques. It can also identify a face from a range of viewing angles, including a profile view.[30][23] Three-dimensional data points from a face vastly improve the precision of face recognition. Three-dimensional face recognition research is enabled by the development of sophisticated sensors that project structured light onto the face.[31] 3D matching techniques are sensitive to expressions, so researchers at Technion applied tools from metric geometry to treat expressions as isometries.[32] A new method of capturing 3D images of faces uses three tracking cameras that point at different angles: one camera points at the front of the subject, a second at the side, and a third at an angle. The cameras work together to track a subject's face in real time and to detect and recognize it.[33]

Thermal cameras

A different form of input data for face recognition comes from thermal cameras. With this procedure the cameras detect only the shape of the head and ignore the subject's accessories such as glasses, hats, or makeup.[34] Unlike conventional cameras, thermal cameras can capture facial imagery even in low-light and nighttime conditions without using a flash and exposing the position of the camera.[35] However, the databases available for thermal face recognition are limited. Efforts to build databases of thermal face images date back to 2004.[34] By 2016 several databases existed, including the IIITD-PSE and the Notre Dame thermal face database.[36] Current thermal face recognition systems are not able to reliably detect a face in a thermal image that has been taken in an outdoor environment.[37]

In 2018, researchers from the U.S. Army Research Laboratory (ARL) developed a technique that would allow them to match facial imagery obtained using a thermal camera with images in databases that were captured using a conventional camera.[38] Known as a cross-spectrum synthesis method because it bridges facial recognition across two different imaging modalities, this method synthesizes a single image by analyzing multiple facial regions and details.[39] It consists of a non-linear regression model that maps a specific thermal image into a corresponding visible facial image and an optimization problem that projects the latent projection back into the image space.[35] ARL scientists have noted that the approach works by combining global information (i.e. features across the entire face) with local information (i.e. features regarding the eyes, nose, and mouth).[40] According to performance tests conducted at ARL, the multi-region cross-spectrum synthesis model demonstrated a performance improvement of about 30% over baseline methods and about 5% over state-of-the-art methods.[39]

Application

Social media

Founded in 2013, Looksery went on to raise money for its face modification app on Kickstarter. After successful crowdfunding, Looksery launched in October 2014. The application allows video chat with others through a special filter for faces that modifies the look of users. Image augmenting applications already on the market, such as FaceTune and Perfect365, were limited to static images, whereas Looksery applied augmented reality to live video. In late 2015 Snapchat purchased Looksery, which would then become its landmark lenses function.[41] Snapchat filter applications use face detection technology, and on the basis of the facial features identified in an image a 3D mesh mask is layered over the face.[42]

DeepFace is a deep learning facial recognition system created by a research group at Facebook. It identifies human faces in digital images. It employs a nine-layer neural net with over 120 million connection weights, and was trained on four million images uploaded by Facebook users.[43][44] The system is said to be 97% accurate, compared to 85% for the FBI's Next Generation Identification system.[45]

TikTok's algorithm has been regarded as especially effective, but many were left to wonder at the exact programming that caused the app to be so effective in guessing the user's desired content.[46] In June 2020, TikTok released a statement about the "For You" page and how it recommended videos to users, which did not mention facial recognition.[47] In February 2021, however, TikTok agreed to a $92 million settlement of a US lawsuit which alleged that the app had used facial recognition in both user videos and its algorithm to identify age, gender and ethnicity.[48]

ID verification

An emerging use of facial recognition is in ID verification services. Many companies and others are now working in the market to provide these services to banks, ICOs, and other e-businesses.[49] Face recognition has been leveraged as a form of biometric authentication for various computing platforms and devices;[23] Android 4.0 "Ice Cream Sandwich" added facial recognition using a smartphone's front camera as a means of unlocking devices,[50][51] while Microsoft introduced face recognition login to its Xbox 360 video game console through its Kinect accessory,[52] as well as Windows 10 via its "Windows Hello" platform (which requires an infrared-illuminated camera).[53] In 2017 Apple's iPhone X smartphone introduced facial recognition to the product line with its "Face ID" platform, which uses an infrared illumination system.[54]

Face ID

Apple introduced Face ID on the flagship iPhone X as a biometric authentication successor to the Touch ID, a fingerprint based system. Face ID has a facial recognition sensor that consists of two parts: a "Romeo" module that projects more than 30,000 infrared dots onto the user's face, and a "Juliet" module that reads the pattern.[55] The pattern is sent to a local "Secure Enclave" in the device's central processing unit (CPU) to confirm a match with the phone owner's face.[56]

The facial pattern is not accessible by Apple. The system will not work with eyes closed, in an effort to prevent unauthorized access.[56] The technology learns from changes in a user's appearance, and therefore works with hats, scarves, glasses, and many sunglasses, beard and makeup.[57] It also works in the dark. This is done by using a "Flood Illuminator", which is a dedicated infrared flash that throws out invisible infrared light onto the user's face to properly read the 30,000 facial points.[58]

Deployment in security services

Commonwealth

The Australian Border Force and New Zealand Customs Service have set up an automated border processing system called SmartGate that uses face recognition, which compares the face of the traveller with the data in the e-passport microchip.[59][60] All Canadian international airports use facial recognition as part of the Primary Inspection Kiosk program, which compares a traveler's face to the photo stored on their ePassport. This program first came to Vancouver International Airport in early 2017 and was rolled out to all remaining international airports in 2018–2019.[61]

Police forces in the United Kingdom have been trialing live facial recognition technology at public events since 2015.[62] In May 2017, a man was arrested using an automatic facial recognition (AFR) system mounted on a van operated by the South Wales Police. Ars Technica reported that "this appears to be the first time [AFR] has led to an arrest".[63] However, a 2018 report by Big Brother Watch found that these systems were up to 98% inaccurate.[62] The report also revealed that two UK police forces, South Wales Police and the Metropolitan Police, were using live facial recognition at public events and in public spaces.[64] In September 2019, South Wales Police's use of facial recognition was ruled lawful.[65] Live facial recognition has been trialled since 2016 in the streets of London and will be used on a regular basis by the Metropolitan Police from the beginning of 2020.[66] In August 2020 the Court of Appeal ruled that the way the facial recognition system had been used by the South Wales Police in 2017 and 2018 violated human rights.[67]

United States

The U.S. Department of State operates one of the largest face recognition systems in the world with a database of 117 million American adults, with photos typically drawn from driver's license photos.[68] Although it is still far from completion, it is being put to use in certain cities to give clues as to who was in the photo. The FBI uses the photos as an investigative tool, not for positive identification.[69] As of 2016, facial recognition was being used to identify people in photos taken by police in San Diego and Los Angeles (not on real-time video, and only against booking photos)[70] and use was planned in West Virginia and Dallas.[71]

In recent years Maryland has used face recognition by comparing people's faces to their driver's license photos. The system drew controversy when it was used in Baltimore to arrest unruly protesters after the death of Freddie Gray in police custody.[72] Many other states are using or developing a similar system; however, some states have laws prohibiting its use.

The FBI has also instituted its Next Generation Identification program to include face recognition, as well as more traditional biometrics like fingerprints and iris scans, which can pull from both criminal and civil databases.[73] The federal Government Accountability Office criticized the FBI for not addressing various concerns related to privacy and accuracy.[74]

Starting in 2018, U.S. Customs and Border Protection deployed "biometric face scanners" at U.S. airports. Passengers taking outbound international flights can complete check-in, security and boarding after having their facial images captured and verified against their ID photos stored in CBP's database. Images captured of travelers with U.S. citizenship are deleted within 12 hours. The TSA has expressed its intention to adopt a similar program for domestic air travel during the security check process in the future. The American Civil Liberties Union is one of the organizations opposing the program, out of concern that it will be used for surveillance purposes.[75]

In 2019, researchers reported that Immigration and Customs Enforcement uses facial recognition software against state driver's license databases, including for some states that provide licenses to undocumented immigrants.[74]

China

In 2006, the Skynet Project was initiated by the Chinese Government to implement CCTV surveillance nationwide. As of 2018, 20 million cameras, many of them capable of real-time facial recognition, had been deployed across the country for this project.[76] Some officials claim that the current Skynet system can scan the entire Chinese population in one second and the world population in two seconds.[77]

In 2017 the Qingdao police were able to identify twenty-five wanted suspects using facial recognition equipment at the Qingdao International Beer Festival, one of whom had been on the run for 10 years.[78] The equipment works by recording a 15-second video clip and taking multiple snapshots of the subject. That data is compared and analyzed with images from the police department's database, and within 20 minutes the subject can be identified with 98.1% accuracy.[79]

In 2018, Chinese police in Zhengzhou and Beijing were using smart glasses to take photos which are compared against a government database using facial recognition to identify suspects, retrieve an address, and track people moving beyond their home areas.[80][81]

As of late 2017, China has deployed facial recognition and artificial intelligence technology in Xinjiang. Reporters visiting the region found surveillance cameras installed every hundred meters or so in several cities, as well as facial recognition checkpoints at areas like gas stations, shopping centers, and mosque entrances.[82][83] In May 2019, Human Rights Watch reported finding Face++ code in the Integrated Joint Operations Platform (IJOP), a police surveillance app used to collect data on, and track the Uighur community in Xinjiang.[84] Human Rights Watch released a correction to its report in June 2019 stating that the Chinese company Megvii did not appear to have collaborated on IJOP, and that the Face++ code in the app was inoperable.[85] In February 2020, following the Coronavirus outbreak, Megvii applied for a bank loan to optimize the body temperature screening system it had launched to help identify people with symptoms of a Coronavirus infection in crowds. In the loan application Megvii stated that it needed to improve the accuracy of identifying masked individuals.[86]

Many public places in China are equipped with facial recognition systems, including railway stations, airports, tourist attractions, expos, and office buildings. In October 2019, a professor at Zhejiang Sci-Tech University sued the Hangzhou Safari Park for abusing private biometric information of customers. The safari park uses facial recognition technology to verify the identities of its Year Card holders. An estimated 300 tourist sites in China have installed facial recognition systems and use them to admit visitors. This case is reported to be the first on the use of facial recognition systems in China.[87] In August 2020 Radio Free Asia reported that in 2019 Geng Guanjun, a citizen of Taiyuan City who had used the WeChat app by Tencent to forward a video to a friend in the United States, was subsequently convicted of the crime of “picking quarrels and provoking troubles”. The court documents showed that the Chinese police used a facial recognition system to identify Geng Guanjun as an "overseas democracy activist" and that China's network management and propaganda departments directly monitor WeChat users.[88]

In 2019, protesters in Hong Kong destroyed smart lampposts amid concerns they could contain cameras and facial recognition systems used for surveillance by Chinese authorities.[89]

Latin America

In the 2000 Mexican presidential election, the Mexican government employed face recognition software to prevent voter fraud. Some individuals had been registering to vote under several different names, in an attempt to place multiple votes. By comparing new face images to those already in the voter database, authorities were able to reduce duplicate registrations.[90]

In Colombia public transport buses are fitted with a facial recognition system by FaceFirst Inc to identify passengers who are sought by the National Police of Colombia. FaceFirst Inc also built the facial recognition system for Tocumen International Airport in Panama. The face recognition system is deployed to identify individuals among the travelers who are sought by the Panamanian National Police or Interpol.[91] Tocumen International Airport operates an airport-wide surveillance system using hundreds of live face recognition cameras to identify wanted individuals passing through the airport. The face recognition system was initially installed as part of a US$11 million contract and included a computer cluster of sixty computers, a fiber-optic cable network for the airport buildings, as well as the installation of 150 surveillance cameras in the airport terminal and at about 30 airport gates.[92]

At the 2014 FIFA World Cup in Brazil the Federal Police of Brazil used face recognition goggles. Face recognition systems "made in China" were also deployed at the 2016 Summer Olympics in Rio de Janeiro.[93] Nuctech Company provided 145 inspection terminals for Maracanã Stadium and 55 terminals for the Deodoro Olympic Park.[94]

European Union

Police forces in at least 21 countries of the European Union use, or plan to use, facial recognition systems, either for administrative or criminal purposes.[95]

Greece

Greek police signed a contract with Intracom-Telecom for the provision of at least 1,000 devices equipped with a live facial recognition system. Delivery is expected before summer 2021. The total value of the contract is over 4 million euros, paid for in large part by the Internal Security Fund of the European Commission.[96]

Italy

Italian police acquired a face recognition system in 2017, Sistema Automatico Riconoscimento Immagini (SARI). In November 2020, the Interior ministry announced plans to use it in real-time to identify people suspected of seeking asylum.[97]

The Netherlands

The Netherlands has deployed facial recognition and artificial intelligence technology since 2016.[98] The database of the Dutch police currently contains over 2.2 million pictures of 1.3 million Dutch citizens. This accounts for about 8% of the population. Hundreds of cameras have been deployed in the city of Amsterdam alone.[99]

South Africa

In South Africa, in 2016, the city of Johannesburg announced it was rolling out smart CCTV cameras complete with automatic number plate recognition and facial recognition.[100]

Deployment in retail stores

The US firm 3VR, now Identiv, is an example of a vendor which began offering facial recognition systems and services to retailers as early as 2007.[101] In 2012 the company advertised benefits such as "dwell and queue line analytics to decrease customer wait times", "facial surveillance analytic[s] to facilitate personalized customer greetings by employees" and the ability to "[c]reate loyalty programs by combining point of sale (POS) data with facial recognition".[102]

United States

In 2018 the National Retail Federation Loss Prevention Research Council called facial recognition technology "a promising new tool" worth evaluating.[103]

In July 2020, the Reuters news agency reported that during the 2010s the pharmacy chain Rite Aid had deployed facial recognition video surveillance systems and components from FaceFirst, DeepCam LLC, and other vendors at some retail locations in the United States.[103] Cathy Langley, Rite Aid's vice president of asset protection, used the phrase "feature matching" to refer to the systems and said that usage of the systems resulted in less violence and organized crime in the company's stores, while former vice president of asset protection Bob Oberosler emphasized improved safety for staff and a reduced need for the involvement of law enforcement organizations.[103] In a 2020 statement to Reuters in response to the reporting, Rite Aid said that it had ceased using the facial recognition software and switched off the cameras.[103]

According to director Read Hayes of the National Retail Federation Loss Prevention Research Council, Rite Aid's surveillance program was either the largest or one of the largest programs in retail.[103] The Home Depot, Menards, Walmart, and 7-Eleven are among other US retailers also engaged in large-scale pilot programs or deployments of facial recognition technology.[103]

Of the Rite Aid stores examined by Reuters in 2020, those in communities where people of color made up the largest racial or ethnic group were three times as likely to have the technology installed,[103] raising concerns related to the substantial history of racial segregation and racial profiling in the United States. Rite Aid said that the selection of locations was "data-driven", based on the theft histories of individual stores, local and national crime data, and site infrastructure.[103]

Additional uses

At the American football championship game Super Bowl XXXV in January 2001, police in Tampa Bay, Florida used Viisage face recognition software to search for potential criminals and terrorists in attendance at the event. 19 people with minor criminal records were potentially identified.[104][105]

Face recognition systems have also been used by photo management software to identify the subjects of photographs, enabling features such as searching images by person, as well as suggesting photos to be shared with a specific contact if their presence were detected in a photo.[106][107] By 2008 facial recognition systems were typically used as access control in security systems.[108]

The United States' popular music and country music celebrity Taylor Swift surreptitiously employed facial recognition technology at a concert in 2018. The camera was embedded in a kiosk near a ticket booth and scanned concert-goers as they entered the facility for known stalkers.[109]

On August 18, 2019, The Times reported that the UAE-owned Manchester City hired a Texas-based firm, Blink Identity, to deploy facial recognition systems in a driver program. The club has planned a single super-fast lane for the supporters at the Etihad stadium.[110] However, civil rights groups cautioned the club against the introduction of this technology, saying that it would risk "normalising a mass surveillance tool". The policy and campaigns officer at Liberty, Hannah Couchman said that Man City's move is alarming, since the fans will be obliged to share deeply sensitive personal information with a private company, where they could be tracked and monitored in their everyday lives.[111]

In August 2020, amid the COVID-19 pandemic in the United States, American football stadiums of New York and Los Angeles announced the installation of facial recognition for upcoming matches. The purpose is to make the entry process as touchless as possible.[112] Disney's Magic Kingdom, near Orlando, Florida, likewise announced a test of facial recognition technology to create a touchless experience during the pandemic; the test was originally slated to take place between March 23 and April 23, 2021 but the limited timeframe had been removed as of late April.[113]

Advantages and disadvantages

Compared to other biometric systems

In 2006, the performance of the latest face recognition algorithms was evaluated in the Face Recognition Grand Challenge (FRGC). High-resolution face images, 3-D face scans, and iris images were used in the tests. The results indicated that the new algorithms are 10 times more accurate than the face recognition algorithms of 2002 and 100 times more accurate than those of 1995. Some of the algorithms were able to outperform human participants in recognizing faces and could uniquely identify identical twins.[30][114]

One key advantage of a facial recognition system is that it is able to perform mass identification, as it does not require the cooperation of the test subject to work. Properly designed systems installed in airports, multiplexes, and other public places can identify individuals among the crowd, without passers-by even being aware of the system.[115] However, compared to other biometric techniques, face recognition may not be the most reliable and efficient. Quality measures are very important in facial recognition systems, as large degrees of variation are possible in face images. Factors such as illumination, expression, pose and noise during face capture can affect the performance of facial recognition systems.[115] Among all biometric systems, facial recognition has the highest false acceptance and rejection rates,[115] and so questions have been raised about the effectiveness of face recognition software in cases of railway and airport security.[116]
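The false acceptance and false rejection rates mentioned above can be computed from comparison scores once a decision threshold is chosen. The sketch below assumes a matcher that outputs a similarity score where higher means "more likely the same person"; the scores and the function name `error_rates` are invented for illustration and do not come from any cited evaluation.

```python
from typing import List, Tuple


def error_rates(genuine: List[float], impostor: List[float],
                threshold: float) -> Tuple[float, float]:
    """Return (false acceptance rate, false rejection rate) at a threshold.

    A comparison is accepted when its score is >= threshold.
    genuine:  scores from pairs of images of the same person.
    impostor: scores from pairs of images of different people.
    """
    false_accepts = sum(1 for score in impostor if score >= threshold)
    false_rejects = sum(1 for score in genuine if score < threshold)
    return false_accepts / len(impostor), false_rejects / len(genuine)


# Made-up similarity scores for illustration only.
GENUINE = [0.91, 0.84, 0.77, 0.62, 0.55]
IMPOSTOR = [0.10, 0.25, 0.40, 0.58, 0.66]

for threshold in (0.5, 0.6, 0.7):
    far, frr = error_rates(GENUINE, IMPOSTOR, threshold)
    print(f"threshold={threshold:.1f}  FAR={far:.2f}  FRR={frr:.2f}")
```

Raising the threshold lowers the false acceptance rate at the cost of a higher false rejection rate, so a system's error rates are only meaningful when reported together at a stated operating point.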

Weaknesses

Ralph Gross, a researcher at the Carnegie Mellon Robotics Institute in 2008, describes one obstacle related to the viewing angle of the face: "Face recognition has been getting pretty good at full frontal faces and 20 degrees off, but as soon as you go towards profile, there've been problems."[30] Besides the pose variations, low-resolution face images are also very hard to recognize. This is one of the main obstacles of face recognition in surveillance systems.[117]

Face recognition is less effective if facial expressions vary. A big smile can render the system less effective. For instance, in 2009 Canada allowed only neutral facial expressions in passport photos.[118]

There is also inconsistency in the datasets used by researchers. Researchers may use anywhere from several subjects to scores of subjects and a few hundred images to thousands of images. It is important for researchers to make the datasets they used available to each other, or at least to have a standard dataset.[119]

Ineffectiveness

Critics of the technology complain that the London Borough of Newham scheme had, as of 2004, never recognized a single criminal, despite several criminals in the system's database living in the Borough and the system having been running for several years. "Not once, as far as the police know, has Newham's automatic face recognition system spotted a live target."[105][120] This information seems to conflict with claims that the system was credited with a 34% reduction in crime (which is why it was also rolled out to Birmingham).[121]

An experiment in 2002 by the local police department in Tampa, Florida, had similarly disappointing results.[105] A system at Boston's Logan Airport was shut down in 2003 after failing to make any matches during a two-year test period.[122]

In 2014, Facebook stated that in a standardized two-option facial recognition test, its online system scored 97.25% accuracy, compared to the human benchmark of 97.5%.[123]

Systems are often advertised as having accuracy near 100%; this is misleading, as the studies often use much smaller sample sizes than would be necessary for large-scale applications. Because facial recognition is not completely accurate, it creates a list of potential matches. A human operator must then look through these potential matches, and studies show that operators pick the correct match out of the list only about half the time. This creates a risk of targeting the wrong suspect.[69][124]
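How such a list of potential matches might be produced can be sketched as a one-to-many search. The example assumes that each face has already been reduced to a numeric feature vector and that similarity is measured by cosine similarity; the three-dimensional vectors, the gallery names and the function `candidate_list` are hypothetical and far smaller than anything a real system would use.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def candidate_list(probe: Sequence[float],
                   gallery: Dict[str, Sequence[float]],
                   top_k: int = 3) -> List[Tuple[str, float]]:
    """Rank gallery entries by similarity to the probe and keep the best top_k."""
    scored = [(name, cosine_similarity(probe, vector))
              for name, vector in gallery.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Made-up feature vectors for four enrolled people.
GALLERY = {
    "person_a": (1.0, 0.0, 0.0),
    "person_b": (0.9, 0.1, 0.0),
    "person_c": (0.0, 1.0, 0.0),
    "person_d": (0.0, 0.0, 1.0),
}

candidates = candidate_list((0.8, 0.2, 0.0), GALLERY, top_k=2)
for name, score in candidates:
    print(f"{name}: {score:.3f}")
```

In this toy gallery the two highest-ranked candidates have nearly identical scores, which illustrates why the final decision is left to a human operator and why that step can go wrong.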

Controversies

Privacy violations

Civil rights organizations and privacy campaigners such as the Electronic Frontier Foundation, Big Brother Watch and the ACLU express concern that privacy is being compromised by the use of surveillance technologies.[125][62][126] Face recognition can be used not just to identify an individual, but also to unearth other personal data associated with an individual – such as other photos featuring the individual, blog posts, social media profiles, Internet behavior, and travel patterns.[127] Concerns have been raised over who would have access to the knowledge of one's whereabouts and people with them at any given time.[128] Moreover, individuals have limited ability to avoid or thwart face recognition tracking unless they hide their faces. This fundamentally changes the dynamic of day-to-day privacy by enabling any marketer, government agency, or random stranger to secretly collect the identities and associated personal information of any individual captured by the face recognition system.[127] Consumers may not understand or be aware of what their data is being used for, which denies them the ability to consent to how their personal information gets shared.[128]

In July 2015, the United States Government Accountability Office issued a report to the Ranking Member, Subcommittee on Privacy, Technology and the Law, Committee on the Judiciary, U.S. Senate. The report discussed facial recognition technology's commercial uses, privacy issues, and the applicable federal law. It states that issues concerning facial recognition technology had been discussed previously and point to the need to update the privacy laws of the United States so that federal law keeps pace with the impact of advanced technologies. The report noted that some industry, government, and private organizations were in the process of developing, or had developed, "voluntary privacy guidelines". These guidelines varied between the stakeholders, but their overall aim was to gain consent and inform citizens of the intended use of facial recognition technology. According to the report, the voluntary privacy guidelines helped to counteract the privacy concerns that arise when citizens are unaware of how their personal data gets put to use.[128]

In 2016 Russian company NtechLab caused a privacy scandal in the international media when it launched the FindFace face recognition system with the promise that Russian users could take photos of strangers in the street and link them to a social media profile on the social media platform Vkontakte (VT).[129] In December 2017, Facebook rolled out a new feature that notifies a user when someone uploads a photo that includes what Facebook thinks is their face, even if they are not tagged. Facebook has attempted to frame the new functionality in a positive light, amidst prior backlashes.[130] Facebook's head of privacy, Rob Sherman, addressed this new feature as one that gives people more control over their photos online. “We’ve thought about this as a really empowering feature,” he says. “There may be photos that exist that you don’t know about.”[131] Facebook's DeepFace has become the subject of several class action lawsuits under the Biometric Information Privacy Act, with claims alleging that Facebook is collecting and storing face recognition data of its users without obtaining informed consent, in direct violation of the 2008 Biometric Information Privacy Act (BIPA).[132] The most recent case was dismissed in January 2016 because the court lacked jurisdiction.[133] In the US, surveillance companies such as Clearview AI are relying on the First Amendment to the United States Constitution to data scrape user accounts on social media platforms for data that can be used in the development of facial recognition systems.[134]

In 2019 the Financial Times first reported that facial recognition software was in use in the King's Cross area of London.[135] The development around London's King's Cross mainline station includes shops, offices, Google's UK HQ and part of St Martin's College. According to the UK Information Commissioner's Office: "Scanning people's faces as they lawfully go about their daily lives, in order to identify them, is a potential threat to privacy that should concern us all."[136][137] The UK Information Commissioner Elizabeth Denham launched an investigation into the use of the King's Cross facial recognition system, operated by the company Argent. In September 2019 Argent announced that facial recognition software would no longer be used at King's Cross. Argent claimed that the software had been deployed between May 2016 and March 2018 on two cameras covering a pedestrian street running through the centre of the development.[138] In October 2019 a report by the deputy London mayor Sophie Linden revealed that in a secret deal the Metropolitan Police had passed photos of seven people to Argent for use in their King's Cross facial recognition system.[139]

Imperfect technology in law enforcement

It is still contested whether facial recognition technology works less accurately on people of color.[140] One study by Joy Buolamwini (MIT Media Lab) and Timnit Gebru (Microsoft Research) found that the error rate for gender recognition for women of color within three commercial facial recognition systems ranged from 23.8% to 36%, whereas for lighter-skinned men it was between 0.0 and 1.6%. Overall accuracy rates for identifying men (91.9%) were higher than for women (79.4%), and none of the systems accommodated a non-binary understanding of gender.[141] It also showed that the datasets used to train commercial facial recognition models were unrepresentative of the broader population and skewed toward lighter-skinned males. However, another study showed that several commercial facial recognition software packages sold to law enforcement offices around the country had a lower false non-match rate for black people than for white people.[142]
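Studies of this kind report error rates separately for each demographic group rather than as a single overall figure. The sketch below shows that disaggregation on a handful of invented records; the group labels, the records and the function `error_rate_by_group` are placeholders and do not reproduce the data or method of either study.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record is (group label, true label, predicted label).
Record = Tuple[str, str, str]


def error_rate_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Fraction of wrong predictions within each group."""
    totals: Dict[str, int] = defaultdict(int)
    errors: Dict[str, int] = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        if truth != predicted:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Invented records for illustration only.
RECORDS = [
    ("group_a", "x", "x"), ("group_a", "x", "y"),
    ("group_a", "x", "x"), ("group_a", "x", "y"),
    ("group_b", "y", "y"), ("group_b", "y", "y"),
    ("group_b", "y", "y"), ("group_b", "y", "y"),
]

per_group = error_rate_by_group(RECORDS)
overall = sum(1 for _, truth, pred in RECORDS if truth != pred) / len(RECORDS)

print("overall error rate:", overall)
for group, rate in sorted(per_group.items()):
    print(group, rate)
```

In this toy data the overall error rate of 25% hides the fact that every error falls on one group, which is the kind of disparity an aggregate accuracy figure cannot reveal.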

Experts fear that face recognition systems may actually be hurting the citizens the police claim they are trying to protect.[143] Face recognition is considered an imperfect biometric, and Georgetown University researcher Clare Garvie concluded in a study that “there’s no consensus in the scientific community that it provides a positive identification of somebody.”[144] Given such large margins of error, both legal advocates and facial recognition software companies say that the technology should supply only a portion of the case, not evidence that on its own can lead to the arrest of an individual.[144] The lack of regulations requiring facial recognition technology companies to test for racial bias can be a significant flaw in its adoption by law enforcement. CyberExtruder, a company that markets itself to law enforcement, said that it had not performed testing or research on bias in its software. CyberExtruder did note that some skin colors are more difficult for the software to recognize with current limitations of the technology. “Just as individuals with very dark skin are hard to identify with high significance via facial recognition, individuals with very pale skin are the same,” said Blake Senftner, a senior software engineer at CyberExtruder.[144]

Data protection

In 2010 Peru passed the Law for Personal Data Protection, which defines biometric information that can be used to identify an individual as sensitive data. In 2012 Colombia passed a comprehensive Data Protection Law which defines biometric data as sensitive information.[145] According to Article 9(1) of the EU's 2016 General Data Protection Regulation (GDPR), the processing of biometric data for the purpose of "uniquely identifying a natural person" is sensitive, and the facial recognition data processed in this way becomes sensitive personal data. In response to the GDPR passing into the law of EU member states, EU-based researchers voiced concern that if they were required under the GDPR to obtain individuals' consent for the processing of their facial recognition data, a face database on the scale of MegaFace could never be established again.[146] In September 2019 the Swedish Data Protection Authority (DPA) issued its first ever financial penalty for a violation of the GDPR against a school that was using the technology to replace time-consuming roll calls during class. The DPA found that the school illegally obtained the biometric data of its students without completing an impact assessment. In addition, the school did not make the DPA aware of the pilot scheme. A 200,000 SEK fine (€19,000/$21,000) was issued.[147]

In the United States, several states have passed laws to protect the privacy of biometric data. Examples include the Illinois Biometric Information Privacy Act (BIPA) and the California Consumer Privacy Act (CCPA).[148] In March 2020 California residents filed a class action against Clearview AI, alleging that the company had illegally collected biometric data online and, with the help of face recognition technology, built up a database of biometric data which was sold to companies and police forces. At the time Clearview AI already faced two lawsuits under BIPA[149] and an investigation by the Privacy Commissioner of Canada for compliance with the Personal Information Protection and Electronic Documents Act (PIPEDA).[150]

Bans on the use of facial recognition technology

In May 2019, San Francisco, California became the first major United States city to ban the use of facial recognition software by police and other local government agencies.[151] San Francisco Supervisor Aaron Peskin introduced regulations that will require agencies to gain approval from the San Francisco Board of Supervisors to purchase surveillance technology.[152] The regulations also require that agencies publicly disclose the intended use for new surveillance technology.[152] In June 2019, Somerville, Massachusetts became the first city on the East Coast to ban face surveillance software for government use,[153] specifically in police investigations and municipal surveillance.[154] In July 2019, Oakland, California banned the use of facial recognition technology by city departments.[155]

The American Civil Liberties Union ("ACLU") has campaigned across the United States for transparency in surveillance technology[154] and has supported the bans on facial recognition software in both San Francisco and Somerville. The ACLU works to challenge the secrecy and surveillance associated with this technology.[citation needed][156]

In January 2020, the European Union suggested, but then quickly scrapped, a proposed moratorium on facial recognition in public spaces.[157][158]

During the George Floyd protests, use of facial recognition by city government was banned in Boston, Massachusetts.[159] As of June 10, 2020, municipal use has been banned in:[160]

- Berkeley, California

- Oakland, California

- Boston, Massachusetts - June 30, 2020[161]

- Brookline, Massachusetts

- Cambridge, Massachusetts

- Northampton, Massachusetts

- Springfield, Massachusetts

- Somerville, Massachusetts

- Portland, Oregon - September, 2020[162]

On October 27, 2020, 22 human rights groups called upon the University of Miami to ban facial recognition technology. This came after students accused the school of using the software to identify student protesters. The university, however, denied the allegations.[163]

Emotion recognition

In the 18th and 19th centuries the belief that facial expressions revealed the moral worth or true inner state of a human was widespread, and physiognomy was a respected science in the Western world. From the early 19th century onwards photography was used in the physiognomic analysis of facial features and facial expression to detect insanity and dementia.[164] In the 1960s and 1970s the study of human emotions and their expressions was reinvented by psychologists, who tried to define a normal range of emotional responses to events.[165] Since the 1970s, research on automated emotion recognition has focused on facial expressions and speech, which are regarded as the two most important ways in which humans communicate emotions to other humans. In the 1970s the Facial Action Coding System (FACS) categorization for the physical expression of emotions was established.[166] Its developer Paul Ekman maintains that there are six emotions that are universal to all human beings and that these can be coded in facial expressions.[167] Research into automatic emotion-specific expression recognition has in the past decades focused on frontal view images of human faces.[168]

In 2016 facial feature emotion recognition algorithms were among the new technologies, alongside high-definition CCTV, high resolution 3D face recognition and iris recognition, that found their way out of university research labs.[169] In 2016 Facebook acquired FacioMetrics, a facial feature emotion recognition corporate spin-off by Carnegie Mellon University. In the same year Apple Inc. acquired the facial feature emotion recognition start-up Emotient.[170] By the end of 2016 commercial vendors of facial recognition systems offered to integrate and deploy emotion recognition algorithms for facial features.[171] By late 2019 the MIT Media Lab spin-off Affectiva[172] offered a facial expression emotion detection product that can recognize emotions in humans while driving.[173]

Anti-facial recognition systems

In January 2013 Japanese researchers from the National Institute of Informatics created 'privacy visor' glasses that use near-infrared light to make the face underneath them unrecognizable to face recognition software.[174] The latest version uses a titanium frame, light-reflective material and a mask which uses angles and patterns to disrupt facial recognition technology through both absorbing and bouncing back light sources. Some projects use adversarial machine learning to come up with new printed patterns that confuse existing face recognition software.[179]

Another method of protection against facial recognition systems is the use of specific haircuts and make-up patterns that prevent the algorithms from detecting a face, known as computer vision dazzle.[180] Incidentally, the makeup styles popular with Juggalos can also protect against facial recognition.[181]

Facial masks that are worn to protect from contagious viruses can reduce the accuracy of facial recognition systems. A 2020 NIST study tested popular one-to-one matching systems and found a failure rate between five and fifty percent on masked individuals. The Verge speculated that mass surveillance systems, which were not included in the study, would be even less accurate than one-to-one matching systems.[182] The facial recognition of Apple Pay can work through many barriers, including heavy makeup, thick beards and even sunglasses, but fails with masks.[183]

* What Is Computer Vision

Computer vision is an interdisciplinary scientific field that deals with how computers can gain high-level understanding from digital images or videos. From the perspective of engineering, it seeks to understand and automate tasks that the human visual system can do.[1][2][3]

Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the forms of decisions.[4][5][6][7] Understanding in this context means the transformation of visual images (the input of the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory.[8]
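As a very small illustration of going from raw image data to "numerical or symbolic information", the Python sketch below treats an image as a grid of grayscale values and turns it into a short description: whether a bright object is present, where it is, and how large it is. The function name, the threshold of 128 and the tiny 4x4 image are assumptions chosen only for this example; real computer vision systems use far more robust methods.

```python
def describe_bright_region(image, threshold=128):
    """Turn a grayscale image (list of rows of 0-255 values) into a
    symbolic description of the pixels at or above `threshold`."""
    bright = [
        (row, col)
        for row, line in enumerate(image)
        for col, value in enumerate(line)
        if value >= threshold
    ]
    if not bright:
        return {"object_present": False, "bounding_box": None, "area": 0}
    rows = [r for r, _ in bright]
    cols = [c for _, c in bright]
    return {
        "object_present": True,  # the "decision"
        # (top row, left column, bottom row, right column)
        "bounding_box": (min(rows), min(cols), max(rows), max(cols)),
        "area": len(bright),  # number of bright pixels
    }


# Hypothetical 4x4 image: a bright 2x2 square on a dark background.
image = [
    [10, 12, 11, 9],
    [10, 200, 210, 9],
    [11, 205, 220, 10],
    [9, 10, 12, 11],
]

description = describe_bright_region(image)
print(description)
```

The input is sixteen numbers with no meaning of their own; the output is a handful of statements about the scene that a later step could act on.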

The scientific discipline of computer vision is concerned with the theory behind artificial systems that extract information from images. The image data can take many forms, such as video sequences, views from multiple cameras, multi-dimensional data from a 3D scanner, or medical scanning device. The technological discipline of computer vision seeks to apply its theories and models to the construction of computer vision systems.

Sub-domains of computer vision include scene reconstruction, object detection, event detection, video tracking, object recognition, 3D pose estimation, learning, indexing, motion estimation, visual servoing, 3D scene modeling, and image restoration.[6]

Definition

Computer vision is an interdisciplinary field that deals with how computers can be made to gain high-level understanding from digital images or videos. From the perspective of engineering, it seeks to automate tasks that the human visual system can do.[1][2][3] "Computer vision is concerned with the automatic extraction, analysis and understanding of useful information from a single image or a sequence of images. It involves the development of a theoretical and algorithmic basis to achieve automatic visual understanding."[9] As a scientific discipline, computer vision is concerned with the theory behind artificial systems that extract information from images. The image data can take many forms, such as video sequences, views from multiple cameras, or multi-dimensional data from a medical scanner.[10] As a technological discipline, computer vision seeks to apply its theories and models for the construction of computer vision systems.

History

In the late 1960s, computer vision began at universities which were pioneering artificial intelligence. It was meant to mimic the human visual system, as a stepping stone to endowing robots with intelligent behavior.[11] In 1966, it was believed that this could be achieved through a summer project, by attaching a camera to a computer and having it "describe what it saw".[12][13]

What distinguished computer vision from the prevalent field of digital image processing at that time was a desire to extract three-dimensional structure from images with the goal of achieving full scene understanding. Studies in the 1970s formed the early foundations for many of the computer vision algorithms that exist today, including extraction of edges from images, labeling of lines, non-polyhedral and polyhedral modeling, representation of objects as interconnections of smaller structures, optical flow, and motion estimation.[11]

The next decade saw studies based on more rigorous mathematical analysis and quantitative aspects of computer vision. These include the concept of scale-space, the inference of shape from various cues such as shading, texture and focus, and contour models known as snakes. Researchers also realized that many of these mathematical concepts could be treated within the same optimization framework as regularization and Markov random fields.[14] By the 1990s, some of the previous research topics became more active than the others. Research in projective 3-D reconstructions led to better understanding of camera calibration. With the advent of optimization methods for camera calibration, it was realized that a lot of the ideas were already explored in bundle adjustment theory from the field of photogrammetry. This led to methods for sparse 3-D reconstructions of scenes from multiple images. Progress was made on the dense stereo correspondence problem and further multi-view stereo techniques. At the same time, variations of graph cut were used to solve image segmentation. This decade also marked the first time statistical learning techniques were used in practice to recognize faces in images (see Eigenface). Toward the end of the 1990s, a significant change came about with the increased interaction between the fields of computer graphics and computer vision. This included image-based rendering, image morphing, view interpolation, panoramic image stitching and early light-field rendering.[11]

Recent work has seen the resurgence of feature-based methods, used in conjunction with machine learning techniques and complex optimization frameworks.[15][16] The advancement of deep learning techniques has brought further life to the field of computer vision. The accuracy of deep learning algorithms on several benchmark computer vision data sets, for tasks ranging from classification to segmentation and optical flow, has surpassed prior methods.[citation needed]

Related fields

Solid-state physics

Solid-state physics is another field that is closely related to computer vision. Most computer vision systems rely on image sensors, which detect electromagnetic radiation, typically in the form of either visible or infra-red light. The sensors are designed using quantum physics. The process by which light interacts with surfaces is explained using physics. Physics also explains the behavior of optics, which are a core part of most imaging systems. Sophisticated image sensors even require quantum mechanics to provide a complete understanding of the image formation process.[11] Also, various measurement problems in physics can be addressed using computer vision, for example motion in fluids.

Neurobiology